Invalid partition table in VMware ESX

Anyone who has expanded a drive in Linux knows it’s a two-step process. First, the partition table must be altered so the partition includes the new space. Second, the file system must be expanded to use the new space within its partition. It’s a fairly straightforward process I’ve done many times, but I ran into an interesting issue when attempting it within VMware.

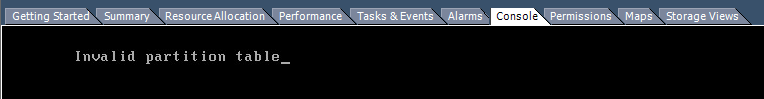

After deleting and recreating the partition with the same start block and a new end block, fdisk might warn that the partition is in use and that the kernel cannot re-read the partition table. At this point, either run the partprobe command or reboot the system so the kernel picks up the new partition information. After rebooting my VM, I saw the following message: Invalid partition table.

For some reason, I was unable to get vSphere to boot a recovery ISO, so I had to shut down the VM and attach its volume to another running VM to diagnose what went wrong. The problem was that I hadn’t set the active (bootable) flag on the recreated partition.

    Disk /dev/sda: 64.4 GB, 64424509440 bytes
    255 heads, 63 sectors/track, 7832 cylinders
    Units = cylinders of 16065 * 512 = 8225280 bytes

       Device Boot      Start         End      Blocks   Id  System
    /dev/sda1   *           1        7832    60806025   83  Linux

    Command (m for help):

That little star (*) in the Boot column was missing, which prevented VMware from booting this partition. In fdisk, the a command toggles that flag. I hadn’t run into this before because on a physical machine, most BIOS and EFI firmware that cannot find an active boot partition will aggressively try to find a bootable partition before giving up. VMware does not.
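For anyone who wants to check the flag without opening fdisk, here is a minimal Python sketch. It assumes a classic MBR (DOS) partition table, where the four primary entries start at byte 446 of the first sector and a status byte of 0x80 marks the active partition. The function names and the demo values (start sector 63, the sector count) are made up for illustration and are not taken from the VM above.

```python
import struct

MBR_SIZE = 512
TABLE_OFFSET = 446   # the partition table follows the boot code area
ENTRY_SIZE = 16      # four primary entries, 16 bytes each
ACTIVE_FLAG = 0x80   # status byte value that fdisk displays as "*"


def read_partitions(mbr):
    """Return the non-empty primary partition entries of a classic MBR."""
    if len(mbr) < MBR_SIZE or mbr[510:512] != b"\x55\xaa":
        raise ValueError("not a valid MBR: missing 0x55AA signature")
    partitions = []
    for index in range(4):
        start = TABLE_OFFSET + index * ENTRY_SIZE
        entry = mbr[start:start + ENTRY_SIZE]
        # Entry layout: status, CHS start (3 bytes, skipped), type,
        # CHS end (3 bytes, skipped), first LBA sector, sector count.
        status, part_type, first_lba, sectors = struct.unpack("<B3xB3xII", entry)
        if part_type == 0:
            continue  # unused slot
        partitions.append({
            "number": index + 1,
            "active": status == ACTIVE_FLAG,
            "type": part_type,
            "first_lba": first_lba,
            "sectors": sectors,
        })
    return partitions


def active_partitions(mbr):
    """Return the numbers of all partitions carrying the active flag."""
    return [p["number"] for p in read_partitions(mbr) if p["active"]]


def build_demo_mbr(active):
    """Build an in-memory MBR with one Linux (0x83) partition for testing."""
    status = ACTIVE_FLAG if active else 0x00
    entry = struct.pack("<B3xB3xII", status, 0x83, 63, 121612050)  # demo values
    mbr = bytearray(MBR_SIZE)
    mbr[TABLE_OFFSET:TABLE_OFFSET + ENTRY_SIZE] = entry
    mbr[510:512] = b"\x55\xaa"
    return bytes(mbr)


if __name__ == "__main__":
    for flag in (True, False):
        found = active_partitions(build_demo_mbr(flag))
        print("active partitions:", found if found else "none (may not boot)")
```

To inspect a real disk, the first 512 bytes could be read as root from the attached volume (for example a device such as /dev/sdb on the rescue VM; that path is only an example) and passed to active_partitions. The sketch only reads; it does not cover GPT disks or logical partitions.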

The behavior of VMware is more predictable from both a security standpoint and a general correctness standpoint. Still, surprises like this aren’t welcome during late-night scheduled outages. So always remember to take snapshots before modifying disks on any critical production systems.