Upgrading the SSD on an MSI GS60 Laptop

Tech Culture Shock: From America to the South Pacific, and Seattle to Chicago

I’ve spent the past two decades in tech, mostly as a developer or system administrator. In that time, I’ve worked in a variety of markets, in six different cities, across three different countries. There are, of course, a number of similarities between companies, no matter where you go. But I also found a lot of oddities that were specific to certain regions and markets. People who only work in one market can get used to an IT monoculture, and may not realize how things operate differently for their counterparts on the other side of the country, or the planet. In this post, I will start with my experiences in Chattanooga, Tennessee and Cincinnati, Ohio. I’ll also talk about international markets and tech scenes from my experience in Melbourne, Australia and Wellington, New Zealand. Finally, I’ll cover my return to the West Coast working in Seattle, Washington, and my current scene in Chicago, Illinois.

Why I Don't Sign Non-Competes

My first job out of university was in the IT department of a payment processing and debt collection company. My desk was next to a call center where, all day, I listened to people on welfare collect bad checks and credit card debt from other people on welfare. When several of our salespeople left to start their own business, taking many of the company’s customers with them, the company began to have everyone in the office, from those in data entry to customer service, sign a non-compete agreement. It was the first non-compete agreement I refused to sign. Over the course of the next fifteen years, I would be asked to sign non-competes several more times, always prior to employment. I’ve always refused, and until recently, I’ve never been denied a position because of that refusal.

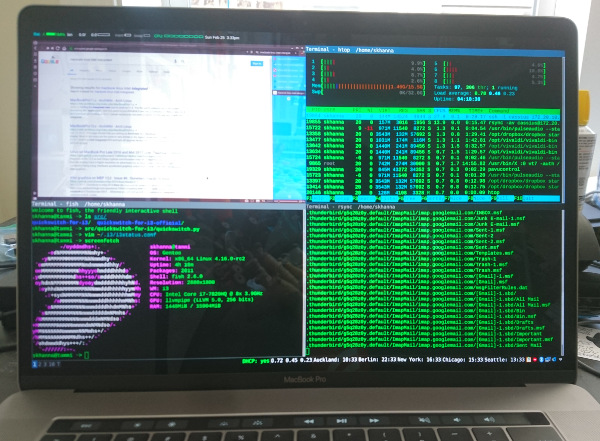

Linux on a MacBook Pro 14,3

Since 2012, at the last three jobs I’ve held as a software engineer, I’ve always used Linux natively on my work desktop or laptop. At some companies this was a choice, and at one it was mandatory. Most development shops give engineers the option of either a Windows PC or a Mac upon hiring them. However, my most recent shop did not. I considered simply running Linux in a virtual machine, but VirtualBox proved to be so slow that it was unusable, and I had some EFI booting issues with a demo of VMware Fusion.

I probably could have worked through those issues, but instead I decided to take the leap and attempt to dual boot into Linux natively. A considerable amount of work has been done in the open source community to get modern Apple hardware working under Linux, much of it documented under the mbp-2016-linux project on GitHub. I was able to leverage quite a bit of that work to get a mostly working development environment. Although some things are still broken, I’m confident I can work through those issues, and hope this post can help other engineers who want to use modern MacBook Pros as powerful Linux development machines.

Bee2: Automating HAProxy and LetsEncrypt with Docker

In a previous post, I introduced Bee2, a Ruby application designed to provision servers and set up DNS records. Later I expanded it using Ansible roles to set up OpenVPN, Docker and firewalls. In the latest iteration, I’ve added a rich Docker library designed to provision applications, run jobs, and back up and restore data volumes. I’ve also included some basic Dockerfiles for setting up HAProxy with LetsEncrypt and Nginx for static content. Building this system has given me a lot more flexibility than what I would have had with something like Docker Compose. It’s not anywhere near as scalable as something like Kubernetes or DC/OS with Marathon, but it works well for my personal setup with just my static websites and personal projects.

Password Algorithms

Sometime in 2008, MySpace had a data breach of nearly 260 million accounts. It exposed passwords that were weakly hashed and forced lowercase, making them relatively easy to crack. In 2012, Yahoo Voice had a data breach of nearly half a million usernames and unencrypted passwords. Now you may think to yourself, “I don’t care. I never use my old MySpace or Yahoo account,” but in the case of the Yahoo data breach, 59% of users also had an account compromised in the Sony breach of 2011, and were using the exact same password for both services!

Using leaked usernames and passwords from one service to attempt to gain entry to other services is known as credential stuffing. People should use a different password for every website or service, because password reuse is one of the major ways online accounts become compromised. For the average person, using a password manager to generate unique passwords for every website and app may seem a bit cumbersome or complicated. But there is another way to have unique passwords for every website: passwords that can easily be remembered, yet are difficult to guess. The solution, often discouraged by security experts, is creating a password algorithm.
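
To make the idea concrete, here is a minimal sketch of what a password algorithm might look like if written down as code. The rule itself is hypothetical and only for illustration: the function name `site_password`, the base phrase, and the four steps are all assumptions, and the function expects a bare domain such as `example.com`. In practice the whole point is to do this in your head, not to run a script.

```python
def site_password(base: str, site: str) -> str:
    """Derive a per-site password from a memorized base phrase and a site name.

    Hypothetical rule, for illustration only:
      1. first letter of the site name, upper-cased
      2. the memorized base phrase
      3. the number of letters in the site name
      4. last letter of the site name, lower-cased
    """
    # Assumes a bare domain: "example.com" -> "example"
    name = site.lower().split(".")[0]
    return f"{name[0].upper()}{base}{len(name)}{name[-1]}"


# The base phrase below is a made-up example, not a recommendation.
print(site_password("c0ffee!Mug", "example.com"))  # Ec0ffee!Mug7e
print(site_password("c0ffee!Mug", "github.com"))   # Gc0ffee!Mug6b
```

Every site gets a different password, yet only the base phrase and the rule need to be remembered. The trade-off is the one that makes security experts wary: a rule this simple could probably be worked out by anyone who sees one or two of the resulting passwords.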

Bee2: Creating a Small Infrastructure for Docker Apps

In a previous post, I showed how I wrote a provisioning system for servers on Vultr. In this post, I’m going to expand upon that framework, adding support for firewalls, Docker, a VPN system and everything needed to create a small and secure infrastructure for personal projects. Two servers will be provisioned: one as a web server running a Docker daemon with only ports 80 and 443 exposed, and a second that establishes a VPN to connect securely to the Docker daemon on the web server.

Bee2: Wrestling with the Vultr API

No one enjoys changing hosting providers. I haven’t had to often, but when I have, it involved manual configuration and copying files. As I’m looking to deploy some new projects, I’m attempting to automate the provisioning process, using hosting providers with Application Programming Interfaces (APIs) to automatically create virtual machines and run Ansible playbooks on those machines. My first attempt involved installing DC/OS on DigitalOcean, which met with mixed results.

In this post, I’ll be examining Bee2, a simple framework I built in Ruby. Although the framework is designed to be expandable to different providers, initially I’ll be implementing a provisioner for Vultr, a new hosting provider that seems to be competing directly with DigitalOcean. While their prices and flexibility seem better than DigitalOcean’s, their APIs are a mess of missing functions, poll/waiting and interesting bugs.

Cloud at Cost Part II: The Unsustainable Business Model

Back in 2013, a startup known as Cloud at Cost attempted to run a hosting service where users paid a one-time cost for Virtual Machines (VMs). For a one-time fee, you could get a server for life. I had purchased one of these VMs, intending to use it as a status page. However, their service has been so unreliable that it’s a shot in the dark as to whether a purchased VM will be available from week to week. Recent changes to their service policy are attempting to recoup their losses through a $9 per year service fee. It’s a poor attempt to salvage a bad business model from a terrible hosting provider.

Leaving Full Time Jobs

I used to work at the University of Cincinnati, and whenever I got frustrated at staff meetings, I’d threaten to move to Australia. After a $300 application fee and a surprisingly short approval process, I had a holiday work visa which allowed me to live and work in Australia for a full year. My manager led me to our director’s office. With my resignation letter on his desk, my director simply asked, “Do you want more money?” to which I responded, “I’m moving to Australia.” There were confused looks from the two of them, awkward silence and finally, “No, really … I’m moving to Australia.” It was the first time I had left the relative security of a full-time position, and it wouldn’t be the last.
